This post necessarily has spoilers about arcosphere balancing in Factorio's Space Exploration mod. If you're playing this mod and want to work it out yourself then please come back after you've finished it.

The Space Exploration mod

for Factorio is well known for introducing new logistics challenges. While

most mods introduce complicated recipes with many stages, they primarily

require building more stuff. Although SE introduces new recipes and several

traditional "more complicated" chains including byproducts and recycling, it

also introduces new logistics problems: not all resources are available on

every planet, sunlight isn't available in the same amount everywhere, and not

all production facilities can be built in all locations, making the real

challenge obtaining and moving the materials around so that they can be

combined. The final logistics challenge, however, is by far the most

interesting. It introduces a new item called an arcosphere, which is available

in eight flavors named for greek letters: epsilon  , lambda

, lambda

, gamma

, gamma  , xi

, xi  , zeta

, zeta  , theta

, theta  , phi

, phi  ,

and omega

,

and omega  . Unlike other ingredients, these arcospheres are not consumed in

production, but rather they are converted between flavors in order to produce

the desired products. For example, a particular tier 3 deep space science card

requires regular materials (naquium plates and significant data) and a pair of

arcospheres, and it produces the science card plus a different pair of

arcospheres. To complicate things, there is a limited number of arcospheres

available, as they must be collected with a process that has diminishing

returns, so to produce large amounts of science they must be converted back to

the original input arcospheres through auxiliary recipes called folding and

inversion. Finally, to avoid a static solution, the production recipes also

come in two variants which accept the same inputs but produce different

arcospheres, with the specific variant selected being controlled by RNG when

production finishes.

. Unlike other ingredients, these arcospheres are not consumed in

production, but rather they are converted between flavors in order to produce

the desired products. For example, a particular tier 3 deep space science card

requires regular materials (naquium plates and significant data) and a pair of

arcospheres, and it produces the science card plus a different pair of

arcospheres. To complicate things, there is a limited number of arcospheres

available, as they must be collected with a process that has diminishing

returns, so to produce large amounts of science they must be converted back to

the original input arcospheres through auxiliary recipes called folding and

inversion. Finally, to avoid a static solution, the production recipes also

come in two variants which accept the same inputs but produce different

arcospheres, with the specific variant selected being controlled by RNG when

production finishes.

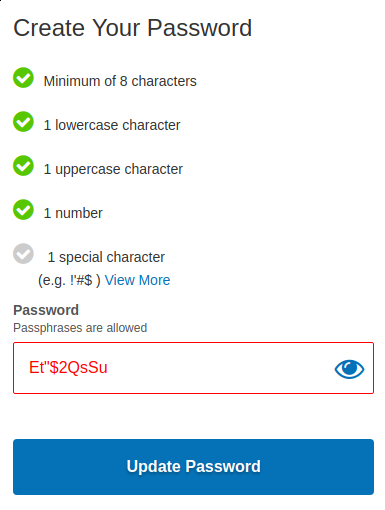

The recipes

To start off we need a consistent way to refer to the various recipes, so we define the following names for the folds and inversions:

These names will be used throughout to refer to specific folds and inversions in order to simplify communicating which steps are required to convert a set of arcospheres into a different set of arcospheres. The names are arbitrary, but it is a nice coincidence that I is for inversion and I also is the next letter after H, and j is used by electrical engineers to represent i, and J is used to name the complementary inversion with respect to I.

While we need names to refer to the folds and inversions, we don't need to worry about any of the production recipes themselves, because they will produce a non-deterministic imbalance in the arcospheres. Instead we will be trying to take an arbitrary imbalance and correct it using only the above tools.

The Basic Circuit Solution

We won't by analyzing this one, but the most basic solution is to observe a

pattern in the folding recipes, specifically that four folds can be combined

to convert one arcosphere into another specific arcosphere, with the help of

two catalyst arcospheres which are returned unmodified. For example, by

combining folds A, C, G, and D, we can convert two  arcospheres into

two

arcospheres into

two  arcospheres. There is also a complementary combination of B, E,

F, and H, which converts in the opposite direction. There are four such pairs:

arcospheres. There is also a complementary combination of B, E,

F, and H, which converts in the opposite direction. There are four such pairs:

,

,  ,

,

and

and  . Using the

inversion recipes, two of these pairs can be converted into the other two

pairs as well.

. Using the

inversion recipes, two of these pairs can be converted into the other two

pairs as well.

The solution is to measure the total number of arcospheres and do two things.

First, determine the balance between each pair: if  run the

forward operation, else if

run the

forward operation, else if  run the reverse operation,

allowing us to maintain roughly the same number of each side of a pair.

Second, sum the two pairs on one side of an inversion and compare with the

other side. Convert the larger sum into the smaller sum. This solution is

dynamic and adapts to the arbitrary conversion that happens while running

arcosphere-based recipes, and it is effective for finishing the game, but it

does have a high static cost: the catalyst arcospheres used in balancing each

pair cannot be used elsewhere in a naive solution, resulting in 16 unavailable

arcospheres. A requester-based solution also naively results in buffering a

number of input arcospheres for each direction of each pair, at least one but

usually two arcospheres of each type, raising the cost to 32 total unavailable

arcospheres. It is relatively inexpensive to obtain about 100 total

arcospheres so this is not prohibitive, but it is still unsatisfying. Smarter

implementations of this approach can reduce this static cost but we won't be

looking into it any further than that.

run the reverse operation,

allowing us to maintain roughly the same number of each side of a pair.

Second, sum the two pairs on one side of an inversion and compare with the

other side. Convert the larger sum into the smaller sum. This solution is

dynamic and adapts to the arbitrary conversion that happens while running

arcosphere-based recipes, and it is effective for finishing the game, but it

does have a high static cost: the catalyst arcospheres used in balancing each

pair cannot be used elsewhere in a naive solution, resulting in 16 unavailable

arcospheres. A requester-based solution also naively results in buffering a

number of input arcospheres for each direction of each pair, at least one but

usually two arcospheres of each type, raising the cost to 32 total unavailable

arcospheres. It is relatively inexpensive to obtain about 100 total

arcospheres so this is not prohibitive, but it is still unsatisfying. Smarter

implementations of this approach can reduce this static cost but we won't be

looking into it any further than that.

Mathematical Representation

Replacing the game mechanics with an abstraction, we could represent our

arcospheres as an 8-dimensional vector, with each coordinate storing the total

number of arcospheres of that type. Initially we might think to restrict the

coordinates to the non-negative integers, but when we think about what a fold

does (for example, subtract one  , subtract one

, subtract one  , add one

, add one

and add one

and add one  ), it is going to be more useful to have the

coordinates be restricted only to integers so that we can interpret a positive

coordinate as a surplus and a negative coordinate as a deficit of the

corresponding arcosphere. The vector's coordinates map to arcosphere flavors

like this:

), it is going to be more useful to have the

coordinates be restricted only to integers so that we can interpret a positive

coordinate as a surplus and a negative coordinate as a deficit of the

corresponding arcosphere. The vector's coordinates map to arcosphere flavors

like this:

Note that the order of the coordinates, much like the order of the folds earlier, is arbitrary and just corresponds to the order in which we first wrote them down (they're not even in the same order as the game shows them, which was definitely a mistake, but it is too late to change things now).

Because a single fold adds and subtracts a static amount--independent of the current amounts of arcospheres--we could represent it as an affine transform, but as all of the interesting information is in the translation vector it is much easier to characterize them in terms of this translation vector only. The folds and inversions are commutative, as a result, because vector addition is commutative. So we can define vectors corresponding to each fold and inversion like so:

Written in this way there are a lot of observations we can make immediately:

- The entries of each fold or inversion vector sum to zero

These observations are important because we learn that although we have 8

coordinates, the dimension of our vector space is only 7, as the final entry

is determined entirely by the earlier entries. We also can conclude that all

of the folds, inversions, and production recipes which do not create or

destroy arcospheres lie in the hyperplane defined by  ,

albeit only at points in the hyperplane with integer coordinates. We also

confirm that the two inversion recipes are complementary, and we learn that

the folding recipes can be broken into two sets of four, where each set sums

to zero as well. The dimension of the operations contained in each these sets

is therefore 3 (not 4). This also allows us to construct negated versions of

each vector, for example:

,

albeit only at points in the hyperplane with integer coordinates. We also

confirm that the two inversion recipes are complementary, and we learn that

the folding recipes can be broken into two sets of four, where each set sums

to zero as well. The dimension of the operations contained in each these sets

is therefore 3 (not 4). This also allows us to construct negated versions of

each vector, for example:  . We will

give those two sets names so that we can refer to them later:

. We will

give those two sets names so that we can refer to them later:

Now we can form a complete plan for solving the balancing problem: we will run the production recipes, then we will obtain an error vector representing the mis-balance between arcospheres, with positive coordinates indicating a surplus and negative coordinates representing a deficit. We will use the inverse of our fold matrix to find a representation of the error vector in fold coordinates, negate that vector, and run each fold the number of times indicated by the corresponding coordinate to balance the total number of arcospheres.

Naturally, there are some complications and the above steps require significant modification to actually find a solution.

Problem 1: Coordinates Must be Integers

This will be more of a recurring issue that requires each step to be modified rather than something we can address up front: both the numbers of arcospheres and the numbers of folds must be integers, so we have two different lattices to work with. We can allow negative values for either as we're representing the difference between current number of arcospheres and a balanced set, and we learned earlier how to represent a negative fold, so for the purposes of algebra we can permit negative numbers of folds, and when it comes time to apply them we can convert those values into an equivalent positive number of different folds. For the most part we will begin with a strategy that would be applicable in a regular vector space and then adapt it to work on the lattice. At the end we will need to analyze the effectiveness of our approach.

Problem 2: The Folds Are Not Orthogonal

The fold vectors are not all orthogonal, so we can't use them as an effective set of basis elements for our fold lattice. We can use the gram-schmidt process to generate orthogonal vectors, but because we require integer coordinates we cannot normalize them. Instead we will have different length basis vectors and we will have to account for this in later steps. You can choose a lot of possible starting points, but we can make several observations first to end up with simpler basis definitions:

With this in mind, we know that our basis elements will be broken into three

groups: one will be a basis over  , one will be a basis over

, one will be a basis over  , and one

will be a basis over

, and one

will be a basis over  , which we will choose to be

, which we will choose to be

.

.

The gram-schmidt process (ignoring normalization) in a regular vector space,

given an input set of vectors  , proceeds like this:

, proceeds like this:

and so on, constructing each orthogonal vector by projecting the new vector

onto all previous orthogonal elements and subtracting them out to obtain an

orthogonal residual. However, the ratio of dot products may be a rational

number rather than an integer, so this approach will not work for calculating

orthogonal vectors either. We must modify it, so to improve readability we

introduce two new expressions,  ,

which is the dot product of a vector with the

,

which is the dot product of a vector with the  th basis vector, and

th basis vector, and

, which is the dot product of the

, which is the dot product of the  th basis vector with

itself. We can write the expression for each vector space equivalent of each

basis vector like so:

th basis vector with

itself. We can write the expression for each vector space equivalent of each

basis vector like so:

Because the basis vector can be any length, we can multiply the left side by a

value  to ensure that all coefficients are integers:

to ensure that all coefficients are integers:

This choice of  has the advantage of producing the minimum length

has the advantage of producing the minimum length

as well.

as well.

Problem 3: Negative Folds

Some of the coefficients above may be negative. We've already discovered how

to represent the negative/additive inverse of any fold during our earlier

observations, but we will restate it here. Because each set of folds sums to

zero, the negative of a given fold is the other elements in its set:

. For a combination of folds from both sets,

to negate the sum we take the complement of each vector with respect to its set

and add them together:

. For a combination of folds from both sets,

to negate the sum we take the complement of each vector with respect to its set

and add them together:

.

We won't write down all the negative vectors here, but we will write down the

negatives of the basis vectors once we have found them all.

.

We won't write down all the negative vectors here, but we will write down the

negatives of the basis vectors once we have found them all.

Finding an Orthogonal Basis

Using the above we can finally find our orthogonal basis. You can pick any

vectors and work through it, but we can simplify the math by choosing as many

already orthogonal vectors as possible to start. We saw that

and

and  form an orthogonal pair, so they are our choice for

the first two basis vectors:

form an orthogonal pair, so they are our choice for

the first two basis vectors:

Next we can pick either of the two remaining vectors in  , so let's choose

, so let's choose

:

:

Analogously, for  we can compute three more basis vectors:

we can compute three more basis vectors:

And, finally we can add the inversion basis vector we chose earlier to fill out the set of 7 that we needed:

As we determined earlier that the hyperplane containing all of our vectors was 7 dimensions, at this point we know that we have found all of the orthogonal basis vectors. Since we already know we will need negatives of these basis vectors, it is helpful to compute them now:

Problem 4: The Inverse Change of Basis

Armed with an orthogonal set of basis vectors for the fold space arranged into

a matrix  where each column is

where each column is  , we can convert a vector whose

coordinates are numbers of folds

, we can convert a vector whose

coordinates are numbers of folds  into an arcosphere error vector

into an arcosphere error vector

through a simple matrix multiplication:

through a simple matrix multiplication:

However, in this problem we wish to compute  for an arbitrary

for an arbitrary

, and

, and  is not a square matrix. Because the columns of

is not a square matrix. Because the columns of  are

orthogonal, we know

are

orthogonal, we know  is invertible, so we can use the pseudoinverse to

find

is invertible, so we can use the pseudoinverse to

find

We also know that because the columns of  are orthogonal,

are orthogonal,  is a

diagonal matrix whose nonzero entries are

is a

diagonal matrix whose nonzero entries are  from earlier, the dot product

of each basis vector with itself, and its inverse is a diagonal matrix whose

entries are the reciprocals of

from earlier, the dot product

of each basis vector with itself, and its inverse is a diagonal matrix whose

entries are the reciprocals of  . We can therefore simplify the

expression to obtain the components of

. We can therefore simplify the

expression to obtain the components of  as

as

As always, we are restricted to integer numbers of folds, so we must decide how best to handle it. In this case, we will chose to round towards zero (corresponding with integer division in most programming languages and in game), but other reasonable choices include:

- round to nearest integer,

- take the ceiling, or

- scale the error vector to obtain integers similar to how we did in finding a basis.

We will consider the difference between integer division and rounding while bounding the residual error. Scaling the error vector seems promising, as it is generated by a production recipe, so scaling it amounts to running the production recipe multiple times. However, each recipe always has two forms and swaps between arcosphere flavors in the output randomly. So at best a scaling approach could be used to do average case balancing, but the error vector amounts to a random walk, as the error for each round of production accumulates. Analyzing and bounding the walk's distance from the origin with a fixed inversion based on the average case is beyond our scope here, but we note that in early trials this often caused the error vector to diverge rather than return to the origin or remain within the unit cell of the folding lattice, as is expected for what appears to be a higher dimensional random walk.

Balancing the Spheres

Combining everything above, we can finally construct an algorithm for dynamically balancing the arcospheres:

- We will run the production recipes for some number of steps (to be determined)

- We will measure the arcosphere error vector as the difference between the number of arcospheres of one flavor and the total number divided by 8 (the number of flavors)

- We will compute

as above, applying the floor function to each

coordinate

as above, applying the floor function to each

coordinate - We will express

as a number of primitive fold and inversion

based on each coordinate, rewriting negative coordinates as different positive

folds using the negative expressions for each basis vector noted earlier

as a number of primitive fold and inversion

based on each coordinate, rewriting negative coordinates as different positive

folds using the negative expressions for each basis vector noted earlier - We will run each fold and inversion the specified number of times,

producing a new error vector of

- Ideally this new error vector has a smaller L1 norm than the starting vector

Bounding the Error

A number of times we have taken the engineer's approach of solving a problem

in a vector space with real-valued coordinates and just hamfisting it into the

lattice. Let's do that again as a quick sanity check on  . If we

remove the floor function and pretend everything can be a real number we find:

. If we

remove the floor function and pretend everything can be a real number we find:

The second equation contains an intimidating expression, but luckily the

definition of the pseudoinverse can help us obtain the third equation. One

property is that for a matrix  with orthogonal columns and pseudoinverse

with orthogonal columns and pseudoinverse

, we have

, we have  . Furthermore any linear combination of the

columns of

. Furthermore any linear combination of the

columns of  will also map to itself, as scalar multiplication commutes with

matrix multiplication. Therefore, as

will also map to itself, as scalar multiplication commutes with

matrix multiplication. Therefore, as  is a linear combination of the

basis vectors,

is a linear combination of the

basis vectors,  .

.

Note that these steps and properties are not true for the lattice we're

actually working with. At this stage we're just checking that if we were in a

friendlier system, our work would yield the behavior we wanted. Instead, in

our situation we will define our lattice vector  as

as

with the  function used here corresponding to our choice of strategy for

converting the values of

function used here corresponding to our choice of strategy for

converting the values of  and

and  to an integer. In game it is

currently done with an integer division that rounds towards zero, but earlier

we also suggested that it could be a round to nearest integer division as

well. Our lattice basis vectors define a unit cell with which we can tile the

arcosphere error lattice, and we can define a residual region that contains

any error vectors for which

to an integer. In game it is

currently done with an integer division that rounds towards zero, but earlier

we also suggested that it could be a round to nearest integer division as

well. Our lattice basis vectors define a unit cell with which we can tile the

arcosphere error lattice, and we can define a residual region that contains

any error vectors for which  , that is, vectors for which

our algorithm does not identify a corresponding lattice vector. Ideally the

residual region is exactly the unit cell, but that may be hard to determine

and will depend on the choice of

, that is, vectors for which

our algorithm does not identify a corresponding lattice vector. Ideally the

residual region is exactly the unit cell, but that may be hard to determine

and will depend on the choice of  , so instead we will do all of our

calculations considering only the residual region.

, so instead we will do all of our

calculations considering only the residual region.

So to bound our error, we must find the vector of maximum L1 norm, subject to

the constraint that it is inside the residual region. This is an integer

programming problem and and could be expressed and solved as

such, but such problems are NP-hard. However, because our basis vectors are

quite short and we have a small number of dimensions, we can instead iterate

over all of the points inside the residual region and calculate their L1

norms, and then find the maximum. Even though this has exponential scaling,

for this specific case we can write the program in an hour or two and brute

force it in under a second, which is fast enough. We can also find all of the

vectors with this norm and find the largest possible imbalance for any one

arcosphere as these will help us determine how many arcospheres are necessary

to operate our system. By inspection, I expect the maximum absolute value of

any coordinate inside the residual region to be no more than 3, because any

vector with a 4 and -4 pair will surely have  for at least one

for at least one  as all the basis vectors have -1, 0, and 1 as their only coordinates and have

as all the basis vectors have -1, 0, and 1 as their only coordinates and have

of 4 or 8. To be conservative we can iterate over a region including -4

and 4, for a total of 9^7 = 4782969 vectors or even expand to -5 and 5 for

11^7 = 19487171 points. The full source for a program written to do this

search is available in a gist on

github

because it ended up over a

hundred lines, which is a bit too much to include here inline.

of 4 or 8. To be conservative we can iterate over a region including -4

and 4, for a total of 9^7 = 4782969 vectors or even expand to -5 and 5 for

11^7 = 19487171 points. The full source for a program written to do this

search is available in a gist on

github

because it ended up over a

hundred lines, which is a bit too much to include here inline.

After running the program we find a lot of useful answers:

- The maximum error vector L1 norm is 12

- 64 vectors achieve this maximum imbalance, as a combination of the values -2, -2, -1, -1, 1, 1, 2, 2 (but not every ordering)

- There are only 6893 integer coordinates with

for all

for all

- The maximum absolute value of any coordinate inside the residual region is 3 as expected

So for any set of production recipes that requires  arcospheres of flavor

arcospheres of flavor

, having

, having  total arcospheres of that flavor is sufficient to ensure

that the above algorithm can always balance the arcospheres enough to run the

recipe set again. So, for example, a system with 80 total arcospheres will

ideally have 10 of each flavor and will have no fewer than 7 of any flavor

after balancing, so it can be used to run a set of recipes that consumes up to

7 of any one flavor, without needing to reuse the result arcospheres, before

balancing must be performed on complete set of 80 arcospheres again, at which

point we can run the same set of recipes (using 7 arcospheres of that type)

again. In practice, the game also has a limitation on circuit logic that

excludes signals with zero value from being included in "each" operations in

arithmetic combinators (for good reason), so we must ensure any count never

hits zero and cannot consume more than 6 of any one flavor in this system.

Some production recipes can be grouped together, such as the tier 3 deep

science cards, which are all consumed together in the same quantities, but

others like naquium tesseracts must run independently. This should be used to

decide how many production steps to run in step 1 of our balancing algorithm

earlier.

total arcospheres of that flavor is sufficient to ensure

that the above algorithm can always balance the arcospheres enough to run the

recipe set again. So, for example, a system with 80 total arcospheres will

ideally have 10 of each flavor and will have no fewer than 7 of any flavor

after balancing, so it can be used to run a set of recipes that consumes up to

7 of any one flavor, without needing to reuse the result arcospheres, before

balancing must be performed on complete set of 80 arcospheres again, at which

point we can run the same set of recipes (using 7 arcospheres of that type)

again. In practice, the game also has a limitation on circuit logic that

excludes signals with zero value from being included in "each" operations in

arithmetic combinators (for good reason), so we must ensure any count never

hits zero and cannot consume more than 6 of any one flavor in this system.

Some production recipes can be grouped together, such as the tier 3 deep

science cards, which are all consumed together in the same quantities, but

others like naquium tesseracts must run independently. This should be used to

decide how many production steps to run in step 1 of our balancing algorithm

earlier.

Earlier we also mentioned that we wanted to consider the impact of using

rounding rather than truncation. If we modify the program to use

residual_round instead of residual_floor as get_residual then we find

improvements:

- The maximum error vector L1 norm becomes 8

- Only 24 vectors have this much imbalance, as a combination of only 1s and -1s

- The maximum error for any one arcosphere is reduced to 2

This corresponds to one more recipe per arcosphere flavor, which would improve the amount of time spent producing rather than balancing. That may justify the effort of implementing correct rounding using circuits in game for this calculation.

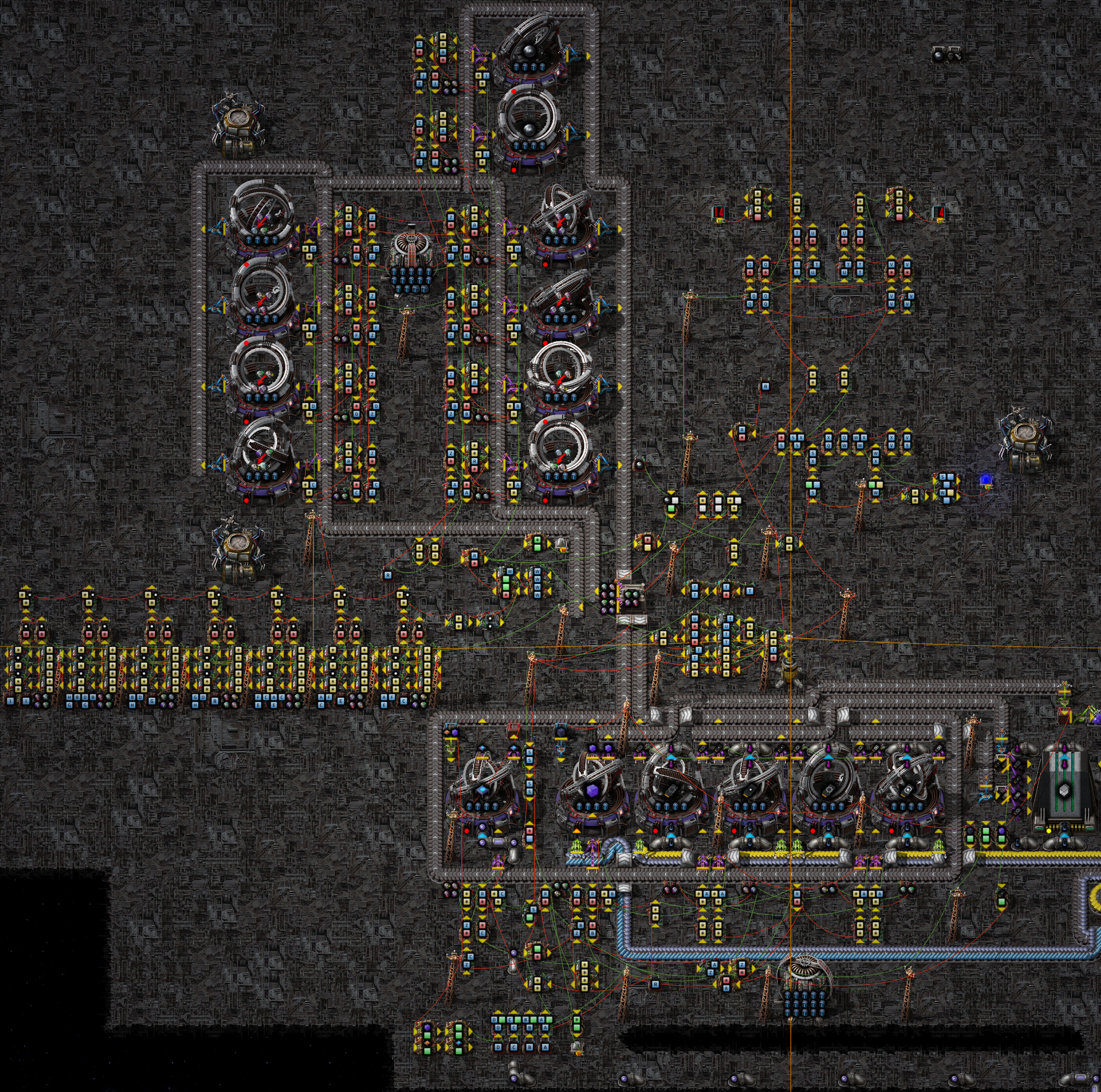

Final Design

In game, I built a machine to employ the above algorithm:

We'll break down the details of the design in part 2 (soon there will be a link here), since the actual implementation is really only concerned with game mechanics. But as a quick preview it has both synchronous and asynchronous logic sections and uses three separate state machines working together to coordinate a balancing phase, a production phase, and a measurement phase. It has exactly one gravimetrics facility for each folding and inversion recipe that is triggered the correct number of times to perform balancing, and it has no static arcosphere cost: there are no arcospheres trapped as catalysts in any loops or kept in a buffer chest or machine input waiting for their balancer to be enabled. It's a cool design and very satisfying to have built in game, because of this analysis supporting its correctness, but it is quite slow, especially compared to a naive, bot based ratio balancer like the one described at the start. Maybe I will be able to optimize or rebuild it by the time part 2 is finished and posted though and see if it can compare favorably with more effort put in there.

denotes the number of

words,

denotes the number of

words,  denotes the correct word, and

denotes the correct word, and  denotes a word on

the list (in some consistent order). A simple approach suggests that we want to

use the maximum likelihood (ML) estimate of

denotes a word on

the list (in some consistent order). A simple approach suggests that we want to

use the maximum likelihood (ML) estimate of

. In this sense, "information" is what

you gain by making an observation, and it is measured by how it affects the

possible states for a latent variable to take. For more compact notation, let's

define

. In this sense, "information" is what

you gain by making an observation, and it is measured by how it affects the

possible states for a latent variable to take. For more compact notation, let's

define  as the "result" random variable for a particular word,

telling us how many letters matched, taking values

as the "result" random variable for a particular word,

telling us how many letters matched, taking values  , where

, where  is the length of words in the current puzzle. Then, we can change our

selection criteria to pick the maximum mutual information:

is the length of words in the current puzzle. Then, we can change our

selection criteria to pick the maximum mutual information:

, apart from it being the

entropy after observing

, apart from it being the

entropy after observing  's value. Conditional entropy is distinct

from conditional probability in a subtle way: conditional probability is based

on a specific observation, such as

's value. Conditional entropy is distinct

from conditional probability in a subtle way: conditional probability is based

on a specific observation, such as  , but conditional

entropy is based on all possible observations and reflects how many

possible system configurations there are after making an observation,

regardless of what its value is. It's a sum of the resulting entropy after each

possible observation, weighted by the probability of that observation

happening:

, but conditional

entropy is based on all possible observations and reflects how many

possible system configurations there are after making an observation,

regardless of what its value is. It's a sum of the resulting entropy after each

possible observation, weighted by the probability of that observation

happening:

and

and  . We know that

. We know that

. If we define the similarity

function

. If we define the similarity

function  to be the number of letters that match in place and

value for two words, and we define the group of sets

to be the number of letters that match in place and

value for two words, and we define the group of sets  as the candidate sets, then we can find the probability distribution for

as the candidate sets, then we can find the probability distribution for

because there

are no duplicates, and therefore this equation matches our intuition for the

probability of each word being an exact match. With the definition of

because there

are no duplicates, and therefore this equation matches our intuition for the

probability of each word being an exact match. With the definition of  in hand, all that remains is finding

in hand, all that remains is finding  , but luckily our definition

for

, but luckily our definition

for  has already solved this problem! If

has already solved this problem! If  , then we know that the

true solution is uniformly distributed in

, then we know that the

true solution is uniformly distributed in

must also be in the observed

set for all previous iterations. This is equivalent to taking the set

intersection, but the notation gets even messier than we have so far, so I

won't list all the details here.

must also be in the observed

set for all previous iterations. This is equivalent to taking the set

intersection, but the notation gets even messier than we have so far, so I

won't list all the details here. operations for each guess), although a computer can do it

effectively. Personally, I just go through and find all the emoticons to remove

duds and then find a word that has one or two overlaps with others for my first

guess, and the field narrows down very quickly.

operations for each guess), although a computer can do it

effectively. Personally, I just go through and find all the emoticons to remove

duds and then find a word that has one or two overlaps with others for my first

guess, and the field narrows down very quickly.